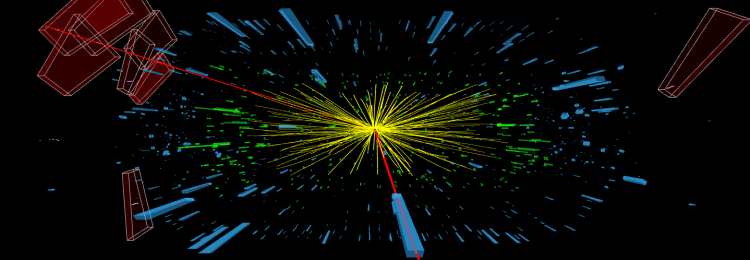

At the LHC, protons do not collide one at a time. Instead, they are grouped into bunches, packages of more than 100,000 million (10¹¹) protons. These bunches are then pointed at each other at the four collision points of the LHC. Even when a bunch is squeezed down to around the width of a human hair, individual protons are still over a billion times smaller than this, so most of them miss each other. By using bunches, physicists ensure that, depending on the operating conditions of the LHC, between 20 and 40 proton-proton collisions take place on average during a single bunch crossing.
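As a rough back-of-the-envelope check, the short Python sketch below estimates what fraction of the protons in two crossing bunches actually collide. It takes the figures quoted above at face value (about 10¹¹ protons per bunch, and 20 to 40 read as the number of proton-proton collisions per crossing). The function name is illustrative only.

```python
PROTONS_PER_BUNCH = 1e11  # "more than 100,000 million" protons, as quoted in the text


def colliding_fraction(collisions_per_crossing, protons_per_bunch=PROTONS_PER_BUNCH):
    """Fraction of protons in the two crossing bunches that take part in a collision."""
    protons_in_collisions = 2 * collisions_per_crossing  # each collision involves two protons
    protons_in_crossing = 2 * protons_per_bunch          # one bunch from each beam
    return protons_in_collisions / protons_in_crossing


for n in (20, 40):
    print(f"{n} collisions per crossing: fraction = {colliding_fraction(n):.1e}")
```

Under these assumptions, only a few protons in every ten billion collide in a given crossing, which is why such densely packed bunches are needed.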

Of these collisions, only a few are “head-on” high energy collisions capable of producing particles of interest to physicists, such as the Higgs boson. The overwhelming majority are low energy collisions that result in well-known scattering processes, which are of little interest. These additional collisions create a noisy environment that is called pile-up.

To accurately compare the data to predictions by the Standard Model, the CMS collaboration traditionally uses simulated events. Here, using our best knowledge of the Standard Model of particle physics, tens of millions of events are generated. These events reflect what we expect to measure with the detector. Important processes, such as the creation of Higgs bosons, and the low energy pile-up events therefore both need to be modeled correctly.

Pile-up modeling represents a significant part of the simulation workflow. It requires a lot of computing time and is, at the same time, very hard to get right. For some processes, such as the decay of the Z boson into two tau leptons (Z⟶ττ), this problem can be circumvented by using a method developed and used by the CMS collaboration. The Z⟶ττ process is the primary background in many analyses with tau leptons in the final state, and therefore accurate modeling of the process is crucial.

The method exploits a physics property called lepton universality. This property states that the carriers of the weak force (the W and Z bosons) couple with equal strength to leptons such as the muon and the tau lepton. Muons are long-lived particles and will traverse the whole detector before decaying. Tau leptons, on the other hand, are heavier and decay almost immediately into lighter leptons or hadrons, accompanied by neutrinos.

When CMS physicists are interested in modeling Z⟶ττ events, it is useful to start by studying a very similar event signature, like the decay of a Z boson into muons (Z⟶μμ). The Standard Model predicts that Z⟶μμ and Z⟶ττ events occur with the same frequency under the same conditions. Pile-up and jets recoiling from the Z boson behave identically in both kinds of events.
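To give a feel for how well this prediction holds, the snippet below compares the measured branching fractions of the two decays. The numbers are approximate values from the Particle Data Group listings, not from this article, and the variable names are illustrative.

```python
# Approximate Z boson branching fractions in percent (Particle Data Group values,
# quoted here as an assumption for illustration; they are not from this article).
BR_Z_TO_MUMU = 3.3662
BR_Z_TO_TAUTAU = 3.3696

ratio = BR_Z_TO_TAUTAU / BR_Z_TO_MUMU
deviation_percent = abs(ratio - 1.0) * 100.0

print(f"tau/muon ratio: {ratio:.4f}")
print(f"deviation from 1: {deviation_percent:.2f}%")
```

With these inputs, the two rates agree at roughly the per-mille level, which is what makes Z⟶μμ events a good stand-in for Z⟶ττ events.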

CMS physicists select Z⟶μμ events from collected data and remove the muons from the event. Two simulated tau lepton decays then replace the muons. Everything except the muons (e.g., pile-up and extra jets) is kept and used from the measured data, which saves simulation effort and guarantees a good description of these phenomena. This method is called the embedding method.

The method is split into four distinct parts, as shown in the figure below.

Figure 1: A schematic overview of the embedding method and its four steps.

In the first step, the selection step, Z⟶μμ events are selected from the data. As one of the crucial design criteria of the CMS detector is its exceptional reconstruction of muons, this selection can be made with high efficiency and precision.

In the second step, all detector deposits from muons are removed from the event. This is done in the inner tracking system, the electromagnetic and hadronic calorimeters, and the outer muon systems. As muons leave only a tiny trace in the calorimeters, those can be cleaned with high purity. After this step, the cleaned event no longer contains any fragments of the two initially selected muons.

The properties of the two muons that were selected in the first step can be used to simulate the decay of two tau leptons in the third step. Tau leptons will again decay into muons, electrons, or light hadrons. The decay of the tau lepton is a well-known physics process that has been observed since the late 1970s, and that can be simulated very accurately. This is the only step in the embedding method that uses simulation.

In the final step, the cleaned event of step two is combined with the simulated tau lepton decays to form a data-simulation hybrid collision. Apart from the two tau lepton decays, all event properties are taken directly from data.
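The four steps can be summarized in a toy Python sketch. Everything here is a hypothetical illustration rather than CMS software: the `Particle` and `Event` classes, the function names, and the mass window are assumptions made for this example. In particular, `simulate_tau_decays` is only a placeholder that relabels the muons as tau leptons with the same momentum, where the real method runs a full simulation of the tau decays.

```python
import math
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Particle:
    kind: str            # e.g. "mu", "tau", "jet"
    px: float            # momentum components and energy in GeV
    py: float
    pz: float
    energy: float
    source: str = "data"  # "data" or "simulation"


@dataclass
class Event:
    particles: list = field(default_factory=list)


def invariant_mass(a, b):
    """Invariant mass of a two-particle system in GeV."""
    e = a.energy + b.energy
    px, py, pz = a.px + b.px, a.py + b.py, a.pz + b.pz
    return math.sqrt(max(e * e - (px * px + py * py + pz * pz), 0.0))


def select_dimuon(event, mass_window=(70.0, 110.0)):
    """Step 1: return the two muons of a Z->mumu candidate, or None.

    The mass window is an assumed, illustrative cut around the Z boson mass.
    """
    muons = [p for p in event.particles if p.kind == "mu"]
    if len(muons) != 2:
        return None
    low, high = mass_window
    if not low <= invariant_mass(*muons) <= high:
        return None
    return muons


def remove_muons(event, muons):
    """Step 2: drop everything that belongs to the selected muons."""
    kept = [p for p in event.particles if not any(p is m for m in muons)]
    return Event(kept)


def simulate_tau_decays(muons):
    """Step 3 (placeholder): stand-in for the simulated tau lepton decays."""
    return [replace(m, kind="tau", source="simulation") for m in muons]


def embed(event):
    """Run all four steps; returns a hybrid event, or None if not selected."""
    muons = select_dimuon(event)
    if muons is None:
        return None
    cleaned = remove_muons(event, muons)
    taus = simulate_tau_decays(muons)
    return Event(cleaned.particles + taus)  # Step 4: merge data and simulation


# Toy input: two back-to-back muons plus one extra jet.
event = Event([
    Particle("mu", 45.0, 0.0, 0.0, 45.0),
    Particle("mu", -45.0, 0.0, 0.0, 45.0),
    Particle("jet", 0.0, 30.0, 5.0, 31.0),
])
hybrid = embed(event)
print([(p.kind, p.source) for p in hybrid.particles])
```

In the toy event, the jet keeps its `"data"` label while only the two tau leptons are marked as `"simulation"`, mirroring the idea that everything except the tau decays comes from measured collisions.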

Figure 2: The number of collisions per bunch crossing as reconstructed by the CMS detector. The top plot shows the estimation of the collected data (black dots) using the different contributing physics processes. The main contribution, marked in yellow, comes from Z⟶ττ events and is estimated using the embedding method. The red line represents the estimation of Z⟶ττ events using regular simulation. The lower part of the plot shows the ratio between collected data and the two different estimates of Z⟶ττ events: the black dots represent the estimation using the embedding method, and the red dots represent the estimation using simulation. The fact that the black dots lie on a horizontal line and the red dots do not shows how the embedding method makes the prediction independent of the number of pile-up events created by the LHC.

The embedding method achieves a much-improved description of Z⟶ττ events with much lower computational effort than full simulation. The technique intrinsically gives a better prediction of measured data when it comes to the description of jets and pile-up. This is shown in Fig. 2, where the expected number of reconstructed collisions is compared to the observation. Here, events produced with the embedding method describe the data significantly better than full simulation.

Events produced using the embedding method are now used by the CMS collaboration for analyses in which Z⟶ττ events make up a significant background, such as the analysis of Higgs boson decays. The Higgs boson also decays into tau leptons (H⟶ττ) but, unlike the Z boson, does not decay to muons and tau leptons with the same probability. The H⟶ττ decay is hard to distinguish from the same decay of the Z boson, making accurate modeling of this background extremely important.

The embedding method is essential for high precision measurements of the Higgs sector, as many observables that are sensitive to the Higgs decay signature are modeled much better by embedded events than by regular simulation.

For the planned High-Luminosity LHC upgrade, a typical pile-up of 140–200 collisions per bunch crossing is expected. The simulation of these collisions will pose a major challenge, due to both the complexity of accurate modeling and the computational effort. Data-driven methods such as this embedding method, which circumvent these challenges, will thus play a vital role in future analyses.

Read more about this and other results in the CMS Physics Analysis Summaries:
